Distributed computing refers to a system in which multiple computers or processors work together to perform a task. Tasks are divided into smaller pieces and distributed across multiple nodes, which allows for faster processing and improved reliability. In today's blog post in Architect Guide, we will focus on distributed computing in distributed systems.

In technical interviews, I have often noticed that many candidates, when asked about distributed systems, are unable to bring distributed computing to the table. When asked specifically, they are unable to explain the concepts. It is very important to understand distributed computing in order to understand distributed systems correctly. We will also cover it from a microservices perspective. Before we move forward, let's look at some definitions of distributed computing.

Distributed computing refers to a computing model in which multiple computers work together to solve a problem, with each computer having its own processing power and memory. The computers communicate with each other to coordinate their efforts and share data, allowing for faster processing and improved scalability.

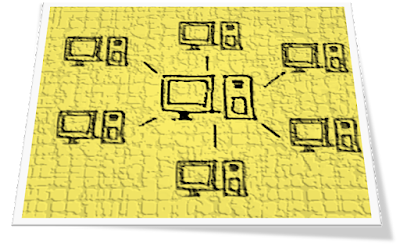

Distributed computing is a type of computing architecture in which a group of interconnected computers work together to solve a single problem or perform a set of related tasks. The computers may be physically located in different geographic locations, and they communicate with each other over a network to share resources and data.

Distributed computing is a method of computing that uses multiple processors or computers to work together on a single task or problem. Each processor or computer performs a portion of the computation and communicates with the others to coordinate their efforts. The result is faster and more efficient processing of data.

Why Distributed Computing?

Distributed computing is used to solve problems that require significant computing resources or cannot be solved by a single computer. There are several reasons why distributed computing is necessary:

Scalability:

As the size and complexity of data and computations grow, it becomes challenging for a single computer to handle them. By distributing the computation across multiple machines, the system can scale horizontally: adding more machines allows larger amounts of data and computation to be processed.

Performance:

By distributing the computation across multiple machines, the system can take advantage of the parallel processing capabilities of multiple processors or cores. This can result in significant performance improvements compared to running the computation on a single computer.

Fault tolerance:

Distributed computing systems can be designed to be fault-tolerant, meaning that if one node fails, the system can continue to operate by redistributing the workload to other nodes. This increases the reliability and availability of the system.
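The redistribution described above can be sketched in a few lines. This is only a minimal illustration, assuming nodes are plain Python functions; `make_node`, `NodeDown`, and `run_with_failover` are hypothetical names invented for this example, not part of any library.

```python
from typing import Callable, Sequence


class NodeDown(Exception):
    """Raised by a simulated node that cannot accept work."""


def make_node(name: str, healthy: bool) -> Callable[[int], str]:
    """Build a hypothetical worker node; unhealthy nodes always fail."""
    def run(task: int) -> str:
        if not healthy:
            raise NodeDown(name)
        return f"task {task} done on {name}"
    return run


def run_with_failover(task: int, nodes: Sequence[Callable[[int], str]]) -> str:
    """Try each node in turn and return the first successful result."""
    for node in nodes:
        try:
            return node(task)
        except NodeDown:
            continue  # this node failed, so hand the task to the next one
    raise RuntimeError("no healthy node available")


# node-a is down, so the task ends up on node-b.
nodes = [make_node("node-a", healthy=False), make_node("node-b", healthy=True)]
result = run_with_failover(7, nodes)
print(result)
```

A real system would also need health checks and timeouts to detect a failed node; here the failure is simulated with an exception to keep the idea visible.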

Geographical distribution:

Distributed computing allows data to be stored and processed in multiple locations or regions. This is particularly important for applications that need low latency for users spread over a wide geographical area, or that must stay available even if one region goes down.

Distributed computing is necessary for solving complex problems that require significant computing resources, scaling, and high availability. The ability to distribute computation across multiple machines enables organizations to process larger amounts of data, achieve better performance, increase fault tolerance, and better support their business needs. Let's now look at some real-world examples of systems that use distributed computing to achieve their goals.

Google Search:

Google's search engine uses distributed computing to process search queries and rank search results. The system sends each query to multiple machines, each of which searches a portion of the index. The partial results are then merged and ranked before being returned to the user. This allows search queries to be answered quickly even though the index is far too large for a single machine.

Amazon Web Services:

Amazon Web Services (AWS) is a cloud computing platform that utilizes distributed computing to provide scalable and flexible computing resources to businesses and individuals. AWS allows users to spin up virtual machines, databases, and other resources across multiple geographic regions, and distributes the workload across multiple servers to ensure high availability and reliability.

Bitcoin:

The Bitcoin cryptocurrency relies on a distributed ledger called the blockchain to verify transactions and create new coins. The blockchain contains all the transaction records, and a copy is kept by the computers in the network. To add a new block, computers known as miners compete to solve a computationally expensive puzzle (proof of work), and the other nodes verify the winning block before accepting it. This protects the security and integrity of the Bitcoin network without a central authority.
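The puzzle-solving described above can be illustrated with a toy proof-of-work loop. This is a simplified sketch and not Bitcoin's actual algorithm: it assumes a single SHA-256 pass over a string and a "leading hex zeros" rule, whereas Bitcoin applies double SHA-256 to a binary block header and compares the result against a numeric target. The function names and the sample transaction text are made up for illustration.

```python
import hashlib


def block_hash(block_data: str, nonce: int) -> str:
    """Hash the block contents together with a candidate nonce."""
    return hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()


def mine(block_data: str, difficulty: int) -> int:
    """Search for the first nonce whose hash starts with `difficulty` hex zeros."""
    prefix = "0" * difficulty
    nonce = 0
    while not block_hash(block_data, nonce).startswith(prefix):
        nonce += 1
    return nonce


def verify(block_data: str, nonce: int, difficulty: int) -> bool:
    """Checking a solution takes one hash, while finding it takes many."""
    return block_hash(block_data, nonce).startswith("0" * difficulty)


nonce = mine("alice pays bob 1 coin", difficulty=3)
print(nonce, verify("alice pays bob 1 coin", nonce, difficulty=3))
```

The asymmetry is the point: mining requires many attempts, but any other node can confirm the result with a single hash.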

Weather forecasting:

Weather forecasting models require significant computing resources to simulate weather patterns and predict future weather conditions. Distributed computing is used to distribute the workload across multiple computers and process the vast amounts of data required for weather forecasting. This allows for more accurate and timely weather predictions.

Distributed computing is used in a wide range of systems and applications to improve performance, scalability, and reliability. From web services to scientific simulations, distributed computing plays a crucial role in enabling many modern technologies and services.

Distributed Computing - How to achieve?

Here are the steps you need to follow to apply distributed computing to your application.

Define the problem:

The first step in distributed computing is to clearly define the problem that needs to be solved. This involves breaking down the problem into smaller tasks that can be distributed across multiple nodes.
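As a rough sketch of this step, the example below splits a list of numbers into chunks, computes a partial result per chunk, and combines the partial results. It assumes the problem is a simple sum of squares, and it uses threads on one machine as stand-ins for nodes; `split` and `sum_of_squares` are hypothetical helpers written for this example.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def split(items: List[int], parts: int) -> List[List[int]]:
    """Divide `items` into at most `parts` contiguous chunks of near-equal size."""
    if not items:
        return []
    size = -(-len(items) // parts)  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]


def sum_of_squares(chunk: List[int]) -> int:
    """The small task that each worker runs independently."""
    return sum(x * x for x in chunk)


data = list(range(1, 11))
chunks = split(data, parts=3)

# Threads stand in for nodes here; each one handles a single chunk.
with ThreadPoolExecutor(max_workers=3) as pool:
    partials = list(pool.map(sum_of_squares, chunks))

total = sum(partials)  # combine the partial results
print(chunks, total)
```

The decomposition works because each chunk can be processed without knowing anything about the others. Problems whose pieces depend on each other need more coordination.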

Identify the nodes:

Once you have defined the problem, you need to identify the nodes that will be used to solve it. Nodes can be physical computers, virtual machines, or containers.

Determine the communication model:

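You need to decide how the nodes will communicate with each other. There are two main models: message passing and shared memory. In message passing, nodes communicate by sending messages to each other over the network. In shared memory, all workers read and write a common memory space. Across separate machines, shared memory has to be emulated, so message passing is the more common choice for nodes connected by a network.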
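The two models can be contrasted in a small sketch. It assumes threads inside one Python process play the role of nodes, which keeps the example runnable but leaves out the network entirely; the names `doubler`, `inbox`, `outbox`, and `shared` are invented for illustration.

```python
import queue
import threading

# --- Message passing: workers exchange messages and share no variables. ---
inbox = queue.Queue()
outbox = queue.Queue()


def doubler(inbox: queue.Queue, outbox: queue.Queue) -> None:
    """Receive numbers, reply with their doubles, stop on the None sentinel."""
    while True:
        msg = inbox.get()
        if msg is None:
            break
        outbox.put(msg * 2)


worker = threading.Thread(target=doubler, args=(inbox, outbox))
worker.start()
for n in (1, 2, 3):
    inbox.put(n)
inbox.put(None)  # tell the worker there is nothing more to process
worker.join()
replies = [outbox.get() for _ in range(3)]

# --- Shared memory: workers update the same data, guarded by a lock. ---
shared = {"counter": 0}
lock = threading.Lock()


def add(times: int) -> None:
    for _ in range(times):
        with lock:  # without the lock, concurrent updates could be lost
            shared["counter"] += 1


threads = [threading.Thread(target=add, args=(1000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(replies, shared["counter"])
```

In the first half the only coupling between the two sides is the message format. In the second half correctness depends on every worker respecting the same lock.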

Choose a distributed computing framework:

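There are many distributed computing frameworks available, including Apache Hadoop (batch processing of large data sets), Apache Spark (in-memory batch and stream analytics), and Apache Storm (real-time stream processing). Choose the framework that best suits your needs and the problem you are trying to solve.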
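To get a feel for the programming model that Hadoop popularized, here is a word count written as map, shuffle, and reduce phases in plain Python. It is only a sketch of the idea on a single machine and does not use any Hadoop or Spark API; the function names are chosen for this example.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def map_phase(line: str) -> List[Tuple[str, int]]:
    """Emit a (word, 1) pair for every word in one line of input."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group values by key, as a framework would do between map and reduce."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups: Dict[str, List[int]]) -> Dict[str, int]:
    """Collapse each group of counts into a single total per word."""
    return {key: sum(values) for key, values in groups.items()}


lines = ["to be or not to be", "to do is to be"]
mapped = [pair for line in lines for pair in map_phase(line)]
counts = reduce_phase(shuffle(mapped))
print(counts)
```

In a real framework the map calls would run on the nodes that hold the input data, and the shuffle would move the grouped pairs across the network to the reducers.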

Design the system architecture:

Once you have chosen a framework, you need to design the system architecture. This involves deciding how the nodes will be connected, how data will be distributed, and how the tasks will be executed.

Implement the system:

Once the architecture has been designed, you can start implementing the system. This involves writing code that will run on each node and perform the required tasks.

Test and optimize:

Once the system has been implemented, you need to test it to ensure that it works as expected. You may also need to optimize the system to improve performance or scalability.

Monitor and maintain:

Finally, you need to monitor the system and maintain it to ensure that it continues to work as expected. This involves monitoring performance metrics and making adjustments as needed to keep the system running smoothly.

Distributed computing in distributed systems can be a complex and challenging task, but it offers significant benefits in terms of speed, reliability, and scalability. By following the steps outlined above, you can design, implement, and maintain a distributed computing system that meets your needs and solves your problem efficiently.

Microservices and Distributed Computing

Distributed computing and microservices are two concepts that are closely related. Microservices architecture is a distributed computing approach that emphasizes the development of small, independent services that communicate with each other using well-defined APIs.

In a microservices architecture, the services are deployed independently and communicate with each other through mechanisms such as REST APIs, message queues, or event streams. Each service typically has its own database, and the services are designed to be loosely coupled, allowing them to be changed or updated without affecting the other services in the system.

Distributed computing plays a crucial role in enabling the communication and coordination between the various microservices. Because the services are deployed on different servers or containers, the communication between them needs to be designed in a way that ensures reliability, scalability, and fault tolerance.

For example, when a user makes a request to a microservice, the request is first routed to a load balancer, which then distributes the request to one of several instances of the microservice. The microservice then processes the request and may need to communicate with other microservices to complete the operation. The communication between the microservices is typically done using REST APIs, message queues, or other communication protocols.
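The routing step in this example can be sketched as a tiny load balancer. The sketch assumes a round-robin strategy, which is only one of several options, and models service instances as plain functions; `RoundRobinBalancer`, `make_instance`, and the `orders-*` names are hypothetical.

```python
import itertools
from typing import Callable, Sequence


def make_instance(name: str) -> Callable[[str], str]:
    """Build a hypothetical microservice instance that tags its responses."""
    def handle(request: str) -> str:
        return f"{name} handled {request}"
    return handle


class RoundRobinBalancer:
    """Hand each incoming request to the next instance in a fixed rotation."""

    def __init__(self, instances: Sequence[Callable[[str], str]]) -> None:
        self._cycle = itertools.cycle(instances)

    def route(self, request: str) -> str:
        return next(self._cycle)(request)


balancer = RoundRobinBalancer([make_instance("orders-1"), make_instance("orders-2")])
responses = [balancer.route(f"req-{i}") for i in range(3)]
print(responses)
```

Production load balancers usually also track instance health and current load, so that a failed or overloaded instance stops receiving traffic.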

In short, the distributed nature of microservices requires careful design of the communication protocols, deployment architecture, and monitoring and management tools to ensure the reliability, scalability, and fault tolerance of the system.
