Together Launches OpenChatKit - The First Open-Source Alternative to ChatGPT

Together is building an intuitive platform that brings together data, models, and computation, empowering researchers, developers, and companies to harness and enhance artificial intelligence. It has recently released OpenChatKit, an open-source alternative to ChatGPT. Since ChatGPT arrived, large language models have drawn widespread attention. Much of that attention goes to industry leaders such as OpenAI, Google, and Meta, but open-source LLMs such as OpenChatKit are now readily available as well.

OpenChatKit version 0.15 is released under the Apache-2.0 license, with full access to its source code, model weights, and training datasets. Like ChatGPT, OpenChatKit is built on a Large Language Model (LLM): an artificial intelligence model that processes and generates human-like language at a large scale. These models are designed to understand and produce natural-language text in a way that resembles how humans communicate, so that machines can comprehend and generate text more naturally.

LLMs are typically created with deep learning: a neural network is trained on vast amounts of text data. During training, the model learns statistical patterns and relationships between words and phrases, which lets it capture much of the structure and context of natural language.
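A neural LLM is far too large to show here, but the core idea of learning "which words tend to follow which" from text can be illustrated with a toy bigram counter. This is a deliberately simplified stand-in (plain word-pair counts, not a neural network) and is not how OpenChatKit itself is trained; the function names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigram(corpus: List[str]) -> Dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    follows: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def predict_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = [
    "the model reads text",
    "the model learns patterns",
    "the model learns context",
]
model = train_bigram(corpus)
print(predict_next(model, "model"))  # learns
```

A real LLM replaces these counts with a neural network that conditions on the whole preceding context rather than just one word, but the training signal (predict what comes next) is similar in spirit.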

OpenChatKit provides a base bot together with the building blocks needed to derive specialized chatbots from it. Developers can use OpenChatKit to customize the model, retain dialogue context across turns, moderate the bot's replies, and build bespoke chatbot applications. The kit includes an instruction-tuned language model with 20 billion parameters, a moderation model with 6 billion parameters, and an extensible retrieval system that can bring up-to-date information from custom repositories into the bot's responses.
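Retaining dialogue context usually means replaying earlier turns into each new prompt and dropping the oldest ones when the prompt grows too long. The sketch below assumes the `<human>:` / `<bot>:` turn markers shown, to our knowledge, on the OpenChatKit chat model's Hugging Face model card; `build_prompt` is a hypothetical helper, and the character budget is a rough stand-in for the model's real token limit.

```python
from typing import List, Tuple


def build_prompt(history: List[Tuple[str, str]], user_msg: str, max_chars: int = 2000) -> str:
    """Assemble a chat prompt from past turns plus the new user message.

    history: (user_text, bot_text) pairs, oldest first.
    Older turns are dropped first once the prompt would exceed max_chars.
    """
    new_turn = f"<human>: {user_msg}\n<bot>:"
    kept: List[str] = []
    used = len(new_turn)
    # Walk backwards so the most recent turns are kept preferentially.
    for user_text, bot_text in reversed(history):
        turn = f"<human>: {user_text}\n<bot>: {bot_text}\n"
        if used + len(turn) > max_chars:
            break
        kept.append(turn)
        used += len(turn)
    # Restore chronological order before appending the open bot turn.
    return "".join(reversed(kept)) + new_turn


history = [
    ("Hi", "Hello! How can I help?"),
    ("What is OpenChatKit?", "An open-source chatbot toolkit."),
]
print(build_prompt(history, "Who released it?"))
```

The prompt ends with an open `<bot>:` turn so that the model's continuation is the next reply; with a small `max_chars`, only the latest turns survive.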

OpenChatKit is powered by an instruction-tuned large language model that was fine-tuned from EleutherAI's GPT-NeoX-20B on over 43 million instructions, using 100% carbon-negative compute. Developers can fine-tune the model further on their own data to improve its accuracy on specific tasks.

The extensible retrieval system also lets the bot pull information from live sources, such as document repositories or APIs, to augment its responses. To keep conversations safe and appropriate, a moderation model fine-tuned from GPT-JT-6B filters out questions the bot should not answer.
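How these pieces fit together can be sketched as a small request pipeline: moderation first, then retrieval, then generation. This is an illustration under assumptions, not the repository's code. The real moderation model (fine-tuned from GPT-JT-6B), retriever, and chat model are replaced by hypothetical stand-in callables, and the `Context:` prompt layout is our own choice.

```python
from typing import Callable, List

REFUSAL = "Sorry, I can't help with that request."


def answer(
    question: str,
    is_flagged: Callable[[str], bool],
    retrieve: Callable[[str], List[str]],
    generate: Callable[[str], str],
) -> str:
    """Route one user question through moderation, retrieval and generation."""
    # 1. Moderation gate: refuse before the chat model ever sees the question.
    if is_flagged(question):
        return REFUSAL
    # 2. Retrieval: fetch supporting passages (e.g. from documents or an API).
    passages = retrieve(question)
    # 3. Generation: prepend any retrieved passages to the chat prompt.
    context = "".join(f"Context: {p}\n" for p in passages)
    return generate(f"{context}<human>: {question}\n<bot>:")


# Hypothetical stand-ins for the moderation model, retriever and chat model.
BLOCKED = {"how do i pick a lock"}


def toy_is_flagged(question: str) -> bool:
    return question.lower().rstrip("?") in BLOCKED


def toy_retrieve(question: str) -> List[str]:
    if "license" in question.lower():
        return ["OpenChatKit is released under the Apache-2.0 license."]
    return []


def echo_generate(prompt: str) -> str:
    return prompt  # a real system would call the chat model here


print(answer("How do I pick a lock?", toy_is_flagged, toy_retrieve, echo_generate))
print(answer("What license does it use?", toy_is_flagged, toy_retrieve, echo_generate))
```

Passing the three components in as callables keeps the orchestration independent of any particular model, so a stub can later be swapped for a real moderation or retrieval backend.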

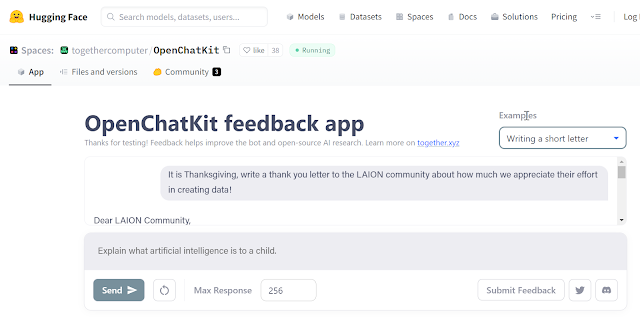

Together's official blog post has more details on the announcement. You can try OpenChatKit on Hugging Face Spaces: https://huggingface.co/spaces/togethercomputer/OpenChatKit. The source code is available on GitHub: https://github.com/togethercomputer/OpenChatKit
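Beyond the hosted demo, the chat model can in principle be run locally with the Hugging Face `transformers` library. The sketch below assumes the Hub ID `togethercomputer/GPT-NeoXT-Chat-Base-20B` and the `<human>:` / `<bot>:` prompt format as we understand them from the model card; verify both before relying on them. It also assumes `torch` and `transformers` are installed and a GPU is available: 20 billion parameters at 2 bytes each in float16 is roughly 40 GB for the weights alone. The helper names are hypothetical.

```python
def extract_reply(generated: str) -> str:
    """Keep only the bot's first reply; the model may go on to write extra turns."""
    return generated.split("<human>:")[0].strip()


def load_chat_model(model_id: str = "togethercomputer/GPT-NeoXT-Chat-Base-20B", device: str = "cuda"):
    # Imported lazily so extract_reply works without these packages installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.float16).to(device)
    return tokenizer, model


def chat_once(tokenizer, model, message: str, max_new_tokens: int = 64) -> str:
    prompt = f"<human>: {message}\n<bot>:"
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens, do_sample=True, temperature=0.8)
    # Decode only the newly generated tokens, not the echoed prompt.
    new_tokens = outputs[0][inputs["input_ids"].shape[1]:]
    return extract_reply(tokenizer.decode(new_tokens, skip_special_tokens=True))
```

Usage would look like `tok, model = load_chat_model()` followed by `print(chat_once(tok, model, "Hello!"))`. Loading is kept separate from generation because loading a 20B-parameter model is slow and should happen once, not per message.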

The repository contains code for the following tasks:

- Training an OpenChatKit model

- Testing inference using the trained model

- Enhancing the model with extra context sourced from a retrieval index
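To make "extra context sourced from a retrieval index" concrete, here is a toy TF-IDF index that scores documents by weighted term overlap with a question and prepends the best matches to the prompt. This is a swapped-in technique for illustration only; the repository's own retrieval code and index format are not reproduced here, and `TinyIndex` and `augment` are hypothetical names.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


class TinyIndex:
    """Toy TF-IDF index: scores documents by weighted term overlap with a query."""

    def __init__(self, docs: List[str]) -> None:
        self.docs = docs
        self.tfs = [Counter(tokenize(d)) for d in docs]
        # Document frequency: in how many documents each term appears.
        df = Counter(term for tf in self.tfs for term in tf)
        n = len(docs)
        # Smoothed inverse document frequency: rarer terms weigh more.
        self.idf = {t: math.log((1 + n) / (1 + c)) + 1 for t, c in df.items()}

    def search(self, query: str, k: int = 2) -> List[Tuple[float, str]]:
        q_terms = set(tokenize(query))
        scored = []
        for doc, tf in zip(self.docs, self.tfs):
            score = sum(tf[t] * self.idf[t] for t in q_terms if t in tf)
            if score > 0:
                scored.append((score, doc))
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]


def augment(query: str, index: TinyIndex) -> str:
    """Prepend the top retrieved passages to the user's question."""
    passages = [doc for _, doc in index.search(query)]
    return "\n".join(passages) + f"\n\n<human>: {query}\n<bot>:"


docs = [
    "OpenChatKit is released under the Apache-2.0 license.",
    "The moderation model is fine-tuned from GPT-JT-6B.",
    "The chat model is fine-tuned from GPT-NeoX-20B.",
]
index = TinyIndex(docs)
print(augment("Which license does OpenChatKit use?", index))
```

A production system would typically use dense embeddings and a vector index instead of term counts, but the flow (search, then prepend results to the prompt) is the same.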
