Google's MusicLM: A Game-Changing Breakthrough in AI Music Generation

Music is one of the most expressive and creative forms of human communication, but also one of the most challenging to model computationally. Generating music that is coherent, diverse, and faithful to a given style or mood requires a deep understanding of musical structure, harmony, timbre, and emotion. While there have been many attempts to create artificial intelligence (AI) systems that can compose music, most of them have been limited by low audio quality, short duration, or lack of control over the musical content.

However, a recent breakthrough by Google researchers may change the game of AI music generation, and it is the subject of today's post. In a paper published in January 2023, the researchers introduced MusicLM, a generative AI model that can create minutes-long musical pieces from text descriptions such as "a calming violin melody backed by a distorted guitar riff". MusicLM can also transform a hummed or whistled melody into a different musical style, and it outputs audio at 24 kHz. The paper describes this output as high-fidelity, although 24 kHz is below the 44.1 kHz used for CD audio.
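To see why generating minutes of 24 kHz audio is hard, a back-of-the-envelope count helps. The sketch below compares the number of raw samples in a clip with the number of discrete tokens a model would have to generate instead. The token rates are assumptions based on figures reported for the SoundStream codec and w2v-BERT tokenizer that MusicLM builds on (50 codec frames per second with 12 tokens per frame, 25 semantic tokens per second); treat them as illustrative rather than exact.

```python
SAMPLE_RATE_HZ = 24_000      # output sampling rate stated for MusicLM
ACOUSTIC_FRAMES_PER_S = 50   # assumption: codec frame rate (24 kHz / hop of 480 samples)
QUANTIZER_LEVELS = 12        # assumption: codec tokens per frame (residual quantizer depth)
SEMANTIC_TOKENS_PER_S = 25   # assumption: semantic-token rate


def sequence_lengths(seconds: float) -> dict:
    """Return raw-sample and token counts for a clip of the given length."""
    return {
        "raw_samples": int(SAMPLE_RATE_HZ * seconds),
        "semantic_tokens": int(SEMANTIC_TOKENS_PER_S * seconds),
        "acoustic_tokens": int(ACOUSTIC_FRAMES_PER_S * QUANTIZER_LEVELS * seconds),
    }


if __name__ == "__main__":
    for label, secs in [("30-second snippet", 30), ("five-minute piece", 300)]:
        print(label, sequence_lengths(secs))
```

Under these assumed rates, a five-minute piece is 7.2 million raw samples but only 7,500 semantic tokens, which suggests why planning the coarse structure first and filling in detail afterwards is attractive.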

MusicLM casts conditional music generation as a hierarchical sequence-to-sequence modeling task: both the conditioning signal and the output audio are represented as sequences of discrete tokens at different levels of abstraction. The text description is first embedded with MuLan, a joint music-text embedding model, and turned into conditioning tokens. A first stage then generates semantic tokens, derived from the w2v-BERT audio model, that capture long-term structure such as melody and rhythm. A second stage generates acoustic tokens from the SoundStream neural audio codec, conditioned on both the text and the semantic tokens, which capture fine detail such as timbre. Finally, the SoundStream decoder turns these acoustic tokens back into a waveform.
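The staged pipeline can be sketched as a toy program. This is not MusicLM's code: per the paper the stages are learned models (MuLan for conditioning, Transformers for the semantic and acoustic stages, and the SoundStream decoder), whereas here every stage is a hypothetical random stand-in. The token rates and vocabulary size are assumptions. What the sketch does show faithfully is the hierarchy: each stage consumes the output of the stage above it, and the length of each sequence follows from the coarser one.

```python
import random
import zlib

SEMANTIC_TOKENS_PER_S = 25   # assumption: semantic-token rate
ACOUSTIC_FRAMES_PER_S = 50   # assumption: codec frame rate
QUANTIZER_LEVELS = 12        # assumption: codec tokens per frame
CODEBOOK_SIZE = 1024         # assumption: vocabulary size for every token type
SAMPLE_RATE_HZ = 24_000
SAMPLES_PER_FRAME = SAMPLE_RATE_HZ // ACOUSTIC_FRAMES_PER_S  # 480


def conditioning_tokens(text: str) -> list[int]:
    """Stand-in for a text embedding model: deterministic token IDs derived from the caption."""
    rng = random.Random(zlib.crc32(text.encode("utf-8")))
    return [rng.randrange(CODEBOOK_SIZE) for _ in range(QUANTIZER_LEVELS)]


def semantic_stage(cond: list[int], seconds: int, rng: random.Random) -> list[int]:
    """Stage 1 stand-in: coarse tokens for long-term structure, 25 per second."""
    n = seconds * SEMANTIC_TOKENS_PER_S
    return [(cond[i % len(cond)] + rng.randrange(CODEBOOK_SIZE)) % CODEBOOK_SIZE for i in range(n)]


def acoustic_stage(cond: list[int], semantic: list[int], rng: random.Random) -> list[list[int]]:
    """Stage 2 stand-in: fine codec frames, each conditioned on the text and a semantic token."""
    frames_per_semantic = ACOUSTIC_FRAMES_PER_S // SEMANTIC_TOKENS_PER_S  # 2 frames per semantic token
    frames = []
    for s in semantic:
        for _ in range(frames_per_semantic):
            frames.append([(s + cond[q] + rng.randrange(CODEBOOK_SIZE)) % CODEBOOK_SIZE
                           for q in range(QUANTIZER_LEVELS)])
    return frames


def decode(frames: list[list[int]]) -> list[float]:
    """Stage 3 stand-in for a codec decoder: SAMPLES_PER_FRAME values in [-1, 1] per frame."""
    samples = []
    for frame in frames:
        level = frame[0] / (CODEBOOK_SIZE - 1) * 2 - 1  # toy: the first code sets a constant amplitude
        samples.extend([level] * SAMPLES_PER_FRAME)
    return samples


def generate(text: str, seconds: int, seed: int = 0) -> list[float]:
    """Run the stages in order, from conditioning tokens down to waveform samples."""
    rng = random.Random(seed)
    cond = conditioning_tokens(text)
    semantic = semantic_stage(cond, seconds, rng)
    frames = acoustic_stage(cond, semantic, rng)
    return decode(frames)


if __name__ == "__main__":
    audio = generate("a calming violin melody backed by a distorted guitar riff", seconds=2)
    print(len(audio))  # 48000, i.e. 2 s at 24 kHz
```

Swapping any stand-in for a real model leaves the control flow unchanged, which is the main appeal of the hierarchical design.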

The advantage of this hierarchy is that it lets MusicLM capture both the global structure and the local detail of music. MusicLM can also be conditioned on a melody in addition to text, so it can render a whistled or hummed tune in the style described by a caption. For instance, given the text "a fusion of reggaeton and electronic dance music" and a whistled melody as input, MusicLM can produce audio that follows the melody while matching the described style.
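One simple way to condition a generator on two signals at once is to concatenate their token sequences into a single prefix. The sketch below assumes that approach. Both tokenizers are hypothetical: MusicLM reportedly learns its melody representation from audio rather than using note numbers, so MIDI notes here are only a readable stand-in. The point it illustrates is that changing the caption changes the text part of the prefix while the melody part stays fixed.

```python
import math
import zlib

CODEBOOK_SIZE = 1024               # assumption: text-token vocabulary size
SEP = CODEBOOK_SIZE                # hypothetical separator token between the two signals
MELODY_OFFSET = CODEBOOK_SIZE + 1  # shift melody IDs so they never collide with text IDs or SEP


def text_tokens(caption: str, n: int = 12) -> list[int]:
    """Hypothetical text tokenizer: n deterministic IDs derived from the caption."""
    return [zlib.crc32(f"{caption}|{i}".encode("utf-8")) % CODEBOOK_SIZE for i in range(n)]


def hz_to_midi(freq_hz: float) -> int:
    """Nearest MIDI note number for a frequency (A4 = 440 Hz = note 69)."""
    return round(69 + 12 * math.log2(freq_hz / 440.0))


def melody_tokens(pitch_track_hz: list[float]) -> list[int]:
    """Hypothetical melody tokenizer: one note-number token per pitch frame; 0 Hz means silence."""
    return [MELODY_OFFSET + (hz_to_midi(f) if f > 0 else 0) for f in pitch_track_hz]


def conditioning_prefix(caption: str, pitch_track_hz: list[float]) -> list[int]:
    """Concatenate both signals into one prefix that a generator would attend to."""
    return text_tokens(caption) + [SEP] + melody_tokens(pitch_track_hz)


if __name__ == "__main__":
    whistled = [440.0, 523.25, 659.26]  # A4, C5, E5
    a = conditioning_prefix("a fusion of reggaeton and electronic dance music", whistled)
    b = conditioning_prefix("a solo piano ballad", whistled)
    print(a[13:] == b[13:])  # True: only the text part of the prefix changed
```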

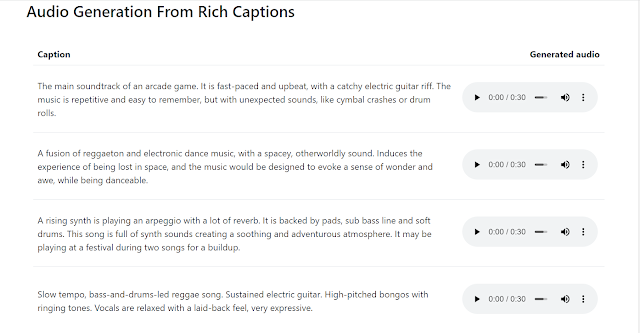

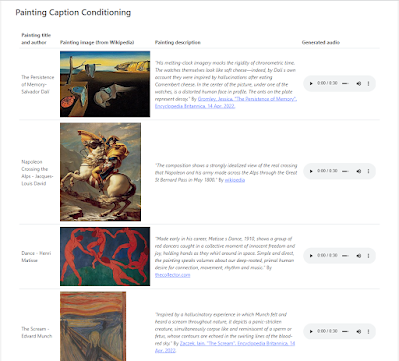

To demonstrate the capabilities of MusicLM, Google researchers have created a website where they showcase some examples of music generated by the model. The website features 30-second snippets of music created from paragraph-long descriptions that prescribe a genre, vibe, and specific instruments. It also features five-minute-long pieces generated from one or two words like "melodic techno" or "swing". Moreover, the website includes a demo of "story mode", where MusicLM is given a sequence of text prompts that influence how it continues the music. For example, given the prompts "time to meditate", "time to wake up", "time to run", and "time to give 100%", MusicLM can generate a musical piece that changes accordingly.
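The demo does not spell out how story mode is implemented, but one plausible scheme is to generate the piece in fixed-length chunks, where each chunk continues from the tail of the audio generated so far while being conditioned on whichever prompt is active. The sketch below only plans such a schedule; the chunk length, context length, and the rule that chunks never straddle a prompt change are all hypothetical choices, not MusicLM's.

```python
from dataclasses import dataclass


@dataclass
class StorySegment:
    prompt: str
    seconds: int


def chunk_plan(segments: list[StorySegment], chunk_s: int = 10, context_s: int = 5):
    """Split a story into generation chunks.

    Returns one (start_s, length_s, prompt, (context_start_s, context_end_s)) tuple per
    chunk. Chunks never straddle a prompt change, and each chunk continues from up to
    context_s seconds of previously generated audio.
    """
    plan = []
    t = 0
    for seg in segments:
        end = t + seg.seconds
        while t < end:
            length = min(chunk_s, end - t)
            plan.append((t, length, seg.prompt, (max(0, t - context_s), t)))
            t += length
    return plan


if __name__ == "__main__":
    story = [StorySegment(p, 15) for p in
             ["time to meditate", "time to wake up", "time to run", "time to give 100%"]]
    for step in chunk_plan(story):
        print(step)
```

Because each chunk sees the end of the previous one, the music can change character at a prompt boundary without restarting from silence.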

MusicLM stands out not only for its audio quality and diversity but also for how closely it follows the text description. In the paper, the researchers compared it with two recent text-to-music systems, Mubert and Riffusion, using both human listening tests and objective metrics, and found that MusicLM outperformed them in audio quality as well as in adherence to the text. They also show that its output stays consistent over several minutes.
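One objective metric used in such comparisons is the Fréchet Audio Distance (FAD): fit a Gaussian to embeddings of reference audio and another to embeddings of generated audio, then measure the Fréchet distance between the two Gaussians (lower is better). The full metric uses complete covariance matrices over embeddings from a pretrained audio classifier. To stay dependency-free, the sketch below assumes diagonal covariances, in which case the squared distance reduces to the squared difference of the means plus the squared difference of the per-dimension standard deviations. The embedding vectors are hypothetical toy data.

```python
import math


def mean_and_var(vectors: list[list[float]]) -> tuple[list[float], list[float]]:
    """Per-dimension mean and (population) variance of a list of equal-length vectors."""
    n = len(vectors)
    dims = len(vectors[0])
    means = [sum(v[d] for v in vectors) / n for d in range(dims)]
    variances = [sum((v[d] - means[d]) ** 2 for v in vectors) / n for d in range(dims)]
    return means, variances


def frechet_distance_diag(real: list[list[float]], generated: list[list[float]]) -> float:
    """Squared Fréchet distance between two diagonal-covariance Gaussians fitted to the inputs."""
    mu_r, var_r = mean_and_var(real)
    mu_g, var_g = mean_and_var(generated)
    mean_term = sum((a - b) ** 2 for a, b in zip(mu_r, mu_g))
    # With diagonal covariances, Tr(S_r + S_g - 2 (S_r S_g)^(1/2)) = sum of (sigma_r - sigma_g)^2.
    cov_term = sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(var_r, var_g))
    return mean_term + cov_term


if __name__ == "__main__":
    real = [[0.0, 0.0], [2.0, 2.0]]       # hypothetical embeddings of reference clips
    generated = [[1.0, 1.0], [3.0, 3.0]]  # hypothetical embeddings of generated clips
    print(frechet_distance_diag(real, generated))  # 2.0: same spread, means shifted by 1 per dimension
```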

MusicLM is a remarkable achievement in AI music generation, but it is not without limitations. The vocals it produces are often unintelligible, and it does not generate meaningful lyrics. Like the MuLan text-music embedding model it builds on, it can misunderstand negations in a prompt and does not reliably follow a precise temporal ordering described in the text. The researchers also point out that generated music reflects biases in the training data, which raises concerns both for cultures underrepresented in that data and about cultural appropriation. They acknowledge that there is still room for improvement and refinement in their model.

To support future research in this field, Google researchers have also released MusicCaps, a new dataset composed of 5.5k music-text pairs with rich text descriptions provided by human experts. MusicCaps covers a wide range of musical genres and styles, and it can be used to train and evaluate models for conditional music generation. Google researchers hope that MusicCaps will inspire other researchers and musicians to explore new ways of creating and interacting with music using AI.

MusicLM is an exciting example of how AI can augment human creativity and expression. By generating music from text descriptions, MusicLM opens up new possibilities for musical exploration and experimentation. It also challenges us to rethink what music is and how we relate to it. As Google researchers write in their paper: "We believe that generating high-fidelity music from natural language will enable novel forms of human-machine collaboration and communication through sound."
