Navigating the Path to Responsible AI: Insights from OpenAI CEO's Congressional Testimony

The development of artificial intelligence (A.I.) has raised many ethical and social concerns, especially as the technology becomes more powerful and ubiquitous. Some of the issues include the potential impact of A.I. on human rights, privacy, security, employment, and accountability. To address these challenges, lawmakers and regulators have been seeking to establish rules and guidelines for the responsible use and governance of A.I.

One of the key players in the field of A.I. is OpenAI, a research organization that aims to create and promote "friendly" A.I. that can benefit humanity without causing harm or being influenced by malicious actors. OpenAI was founded in 2015 by a group of prominent tech entrepreneurs and investors, including Elon Musk, Peter Thiel, Reid Hoffman, and Sam Altman. Altman, who was previously the president of Y Combinator, a startup accelerator, became the chief executive of OpenAI in 2019.

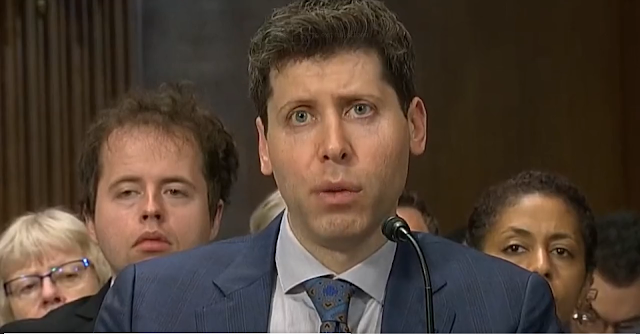

On Tuesday, May 16, 2023, Sam Altman testified before a Senate subcommittee and largely agreed with its members on the need to regulate the A.I. technology being created inside his company and others like Google and Microsoft. Altman, who leads the San Francisco start-up behind the viral chatbot tool ChatGPT, said that A.I. holds "immense promise and pitfalls" for society and humanity, and urged lawmakers to adopt sensible standards and principles to navigate this uncharted territory.

He also acknowledged his own fears about the potential disruption to the labor market and the spread of misinformation by A.I. systems. The hearing, chaired by Senator Richard Blumenthal, a Democrat from Connecticut, marked Altman's first appearance before Congress and his emergence as a leading figure in A.I. development. He was joined by other witnesses, including IBM's Ms. Montgomery, who advocated for a "precision regulation" approach based on specific use cases and transparency measures for A.I. products.

Altman expressed his support for the government's role in ensuring that A.I. is developed and deployed in a safe and ethical manner. He said that OpenAI shares the same vision and values as the lawmakers, and that his organization is committed to transparency and collaboration with other stakeholders. He also acknowledged that A.I. poses significant challenges that require careful attention and action. In an exchange with Mr. Altman, Dr. Marcus challenged OpenAI's lack of data transparency and questioned job replacement claims.

One of the main topics of discussion was the regulation of large-scale A.I. systems, such as GPT-4, a natural language processing model that can generate coherent and diverse texts on a wide range of topics. GPT-4 is one of the flagship projects of OpenAI, and it has been widely praised for its capabilities and applications. However, it has also raised concerns about potential misuse, such as generating fake news, spam, propaganda, or hate speech.

Altman agreed that GPT-4 and similar systems should be subject to regulation and oversight, and that OpenAI is willing to cooperate with the authorities and follow best practices. He said that OpenAI has implemented several measures to prevent and mitigate the harmful effects of GPT-4, such as limiting access to authorized users, monitoring its outputs, and providing feedback mechanisms for reporting issues. He also said that OpenAI is developing tools and standards for measuring and ensuring the quality, reliability, fairness, and safety of GPT-4.

Altman also emphasized that GPT-4 is not a threat to human intelligence or creativity, but rather a tool that can augment and enhance human capabilities. He described GPT-4 as a general-purpose system that can assist users in various tasks and domains, such as education, research, entertainment, and business. It is not intended to replace human writers or thinkers, he said, but to help them generate new ideas and insights.

During the congressional hearing, technology companies urged caution about broad regulations that group different types of A.I. under a single umbrella. IBM's Ms. Montgomery specifically recommended an A.I. law modeled after Europe's proposed regulations, which delineate varying levels of risk. Instead of regulating the technology itself, she called for rules that target specific applications of A.I.

According to Ms. Montgomery, "A.I. is fundamentally a tool with the potential to serve diverse purposes." She further emphasized the importance of adopting a "precision regulation approach to A.I." to address its nuanced applications effectively.

Altman's testimony was largely well-received by the senators, who praised him for his leadership and vision in advancing A.I. research and innovation. They also expressed their interest in working with him and other experts in crafting legislation and policies that can foster the development of beneficial A.I. while protecting the public interest and values.

Altman said that AI has the potential to be a "force for good" but can also be used for harm. He said he is concerned that AI could be used to create deepfakes, to spread misinformation, and to automate jobs.

Altman said that he believes the best way to address AI concerns is through regulation. He said that he supports the creation of an independent agency that would be responsible for regulating AI, with the authority to set standards for AI development, to conduct research on AI, and to investigate potential abuses of AI. He also expressed concern over AI's potential influence on the 2024 presidential election and emphasized the need for appropriate regulation.

Altman's testimony was met with a positive response from lawmakers on the subcommittee. Senator Richard Blumenthal, the chairman of the subcommittee, said that Altman's testimony was "thoughtful and insightful." He said that he believes that Altman's proposal for an independent AI regulatory agency is "a good starting point" for a discussion about how to regulate AI.

LIVE: OpenAI CEO Sam Altman testifies to Senate on potential AI regulation https://t.co/NZVcz3BHLk

— Reuters (@Reuters) May 16, 2023

It is still too early to say what the future of AI regulation will look like. However, Altman's testimony is a sign that the tech industry is starting to take the issue of AI regulation seriously. It is likely that we will see more discussion and debate about AI regulation in the coming months and years.

Here are some of the key takeaways from Altman's testimony:

- Altman believes that AI has the potential to be a "force for good" but that it also has the potential to be used for harm.

- Altman is concerned about the potential for AI to be used to create deepfakes, to spread misinformation, and to automate jobs.

- Altman supports the creation of an independent agency that would be responsible for regulating AI.

- Altman believes that this agency would need to have the authority to set standards for AI development, to conduct research on AI, and to investigate potential abuses of AI.

Altman's testimony is a significant step forward in the conversation about AI regulation. The tech industry, policymakers, and the public should continue that discussion.
