Stanford Unveils Alpaca: A Top-Performing Open-Source Instruction-Following Model

Stanford University has released Alpaca, a new open-source instruction-following model. Alpaca is fine-tuned from Meta's LLaMA 7B model and aims to compete with well-known instruction-following models such as OpenAI's GPT-3.5 (text-davinci-003), ChatGPT, and Microsoft's Bing Chat. Alpaca 7B offers an open and low-cost way to build a chat AI that behaves similarly to GPT-3.5.

Recently, Together launched an open-source alternative to ChatGPT, and Microsoft launched Copilot and its Bing AI chatbot. The AI industry is seeing rapid activity and progress, not only from major players such as OpenAI, Meta, Microsoft, and Google, but also from open-source communities. Although instruction-following models are useful, they still have serious shortcomings: they can generate misinformation, reinforce social stereotypes, and produce harmful language.

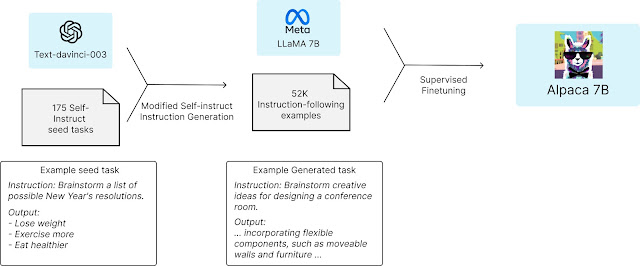

A group from Stanford hopes to help address these issues by introducing Alpaca, an instruction-following language model fine-tuned from Meta's LLaMA 7B model. To train Alpaca, the team used 52,000 instruction-following demonstrations generated with text-davinci-003 using the self-instruct method. When evaluated on the self-instruct evaluation set, Alpaca behaves in many ways like OpenAI's text-davinci-003, yet it is remarkably small and cheap to replicate.

According to Stanford, the training recipe and data will be made publicly available, and the team plans to release the model weights in the future. Stanford has also provided an interactive demo to help researchers better understand Alpaca's behavior. By letting users interact with the model, the team hopes to uncover unexpected capabilities and identify areas for improvement. Users are encouraged to report any problematic behavior they observe in the web demo, which will help the team address and prevent such issues in the future.

It is important to note that Alpaca is intended for academic research only; commercial use is not permitted at the moment because the model is not yet ready for it. According to Stanford, Alpaca, like many other language models, has several limitations, including generating false information, using toxic language, and propagating stereotypes. In particular, hallucination appears to be especially common in Alpaca, even compared with text-davinci-003.

The interactive demo appears to have been suspended and is not working at the moment. For more information, see the Alpaca GitHub page at https://github.com/tatsu-lab/stanford_alpaca. The Stanford Alpaca page also provides more details on this announcement and the model.